How can a software application succeed in a fast-changing digital environment where user demands can increase rapidly? Scalability is a challenge that many developers and organizations face. A sudden surge of users can overload systems, leading to performance problems and unhappy customers. However, with the right approach to software scalability, businesses can meet these demands and ensure long-term growth. In this article, we’ll explore key practices to achieve scalability in IT, offering practical advice for developers on building systems that can handle future challenges.

What is Scalability in Software Engineering?

Scalability in IT refers to how well a system, application, or network handles increasing workloads without a drop in performance. This is critical for organizations that need to respond to fluctuations in user demand or data volume. A scalable system keeps performing well as it grows to serve more users, data, or transactions.

In software, scalability is the ability of a system to grow in capacity and efficiently handle increased loads while maintaining performance. There are two main types of scalability: vertical and horizontal. Vertical scalability (scaling up) increases the capacity of existing hardware or software, for example by adding CPU power or memory to a server. Horizontal scalability (scaling out) adds more machines or nodes and distributes the load across them. Both approaches help organizations meet growing demand without overstraining their resources.

Scalability in software isn’t just about adding resources—it’s about thoughtful planning and design. Key elements that influence scalability include:

- Capacity. The system should be able to support many concurrent users without performance issues.

- Data management. Scalable systems must efficiently manage increasing amounts of data, ensuring that performance stays stable as the data grows.

- Code logic. The code must allow for modification and expansion so that new features or improvements do not require a complete overhaul of the codebase.

Any company that wants to grow sustainably needs to plan for how its systems will respond to the pressure of scale. Planning for scalability makes systems adaptable and helps you stay competitive without the cost of completely redesigning your IT infrastructure.

Planning for Software Scalability

A key consideration when developing software is how to make it scalable. This means building scalability into the software architecture so the system can adapt to future demands. The process has two stages: first, evaluate the current system; then, plan the system architecture. Let's look at each in turn.

Initial Assessment

Before planning for scalability, assess the system that is currently in place. This helps you identify constraints and anticipate growth. Consider these four categories:

- Performance. Measure how the system performs today: examine its response times, throughput, and peak loads. These figures reveal potential weaknesses that will surface as user traffic increases.

- User growth projections. Estimate how much the user base is expected to grow, taking market trends and business objectives into account. Knowing how many users will be on the system at any given time helps guide scaling decisions.

- Resource utilization. Look at how the system’s hardware and software resources, such as servers and databases, are used. Identify any parts that are either underused or overloaded.

- Technical debt. Identify outdated technology or code that might limit scalability, such as legacy systems or components that could cause problems as the system grows.

This evaluation gives you a clear picture of how the system is structured and a solid starting point for scaling it.
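To turn the performance and growth figures from the assessment into a rough capacity target, a back-of-the-envelope calculation helps. The Python sketch below is a minimal illustration, not a standard sizing method: the helper name `nodes_needed`, the peak factor, the per-node capacity, and the 30% headroom are all assumptions you would replace with your own measurements.

```python
import math


def nodes_needed(concurrent_users: int,
                 requests_per_user_per_min: float,
                 peak_factor: float,
                 node_capacity_rps: float,
                 headroom: float = 0.3) -> int:
    """Estimate how many identical nodes a horizontally scaled tier needs.

    Hypothetical helper: every input is an assumption to be replaced with
    numbers from your own load tests and user growth projections.
    """
    # Average request rate generated by the projected concurrent users.
    average_rps = concurrent_users * requests_per_user_per_min / 60
    # Model peak traffic as a multiple of the average rate.
    peak_rps = average_rps * peak_factor
    # Keep spare capacity so no node runs at 100% utilization.
    usable_rps_per_node = node_capacity_rps * (1 - headroom)
    return math.ceil(peak_rps / usable_rps_per_node)


# Today: 12,000 concurrent users, 6 requests/min each, peaks at 2.5x average,
# and a node that load testing showed can serve 500 requests/second.
print(nodes_needed(12_000, 6, 2.5, 500))   # 9 nodes
# Projected growth to 24,000 users, scaling out with the same node size.
print(nodes_needed(24_000, 6, 2.5, 500))   # 18 nodes
# Scaling up instead: nodes twice as powerful (1,000 requests/second).
print(nodes_needed(24_000, 6, 2.5, 1000))  # 9 nodes
```

Comparing the last two calls shows the trade-off described earlier: the same projected load can be met by adding nodes (scaling out) or by using more powerful nodes (scaling up).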

Architecture Design

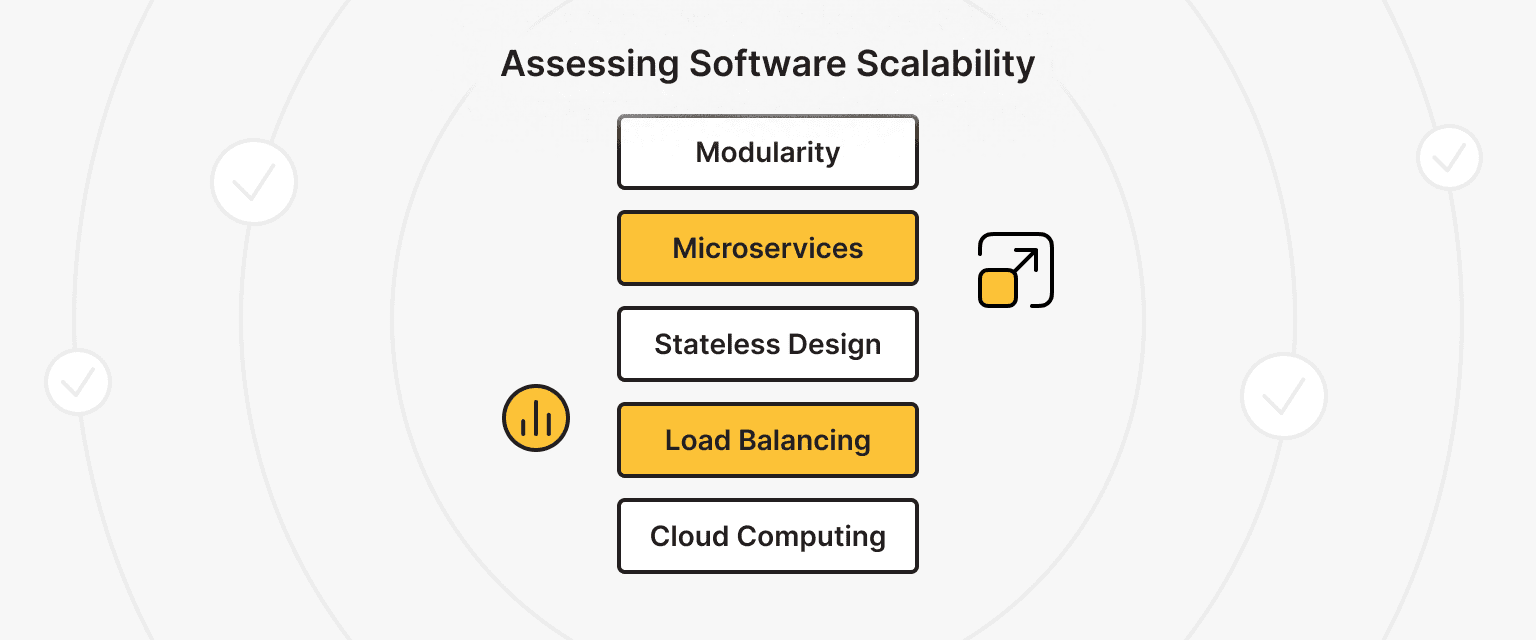

After the initial assessment, the next step is designing a scalable architecture. Key principles to follow include:

- Modularity. Build the system from separate modules so that each can be developed, tested, and extended independently. This simplifies changes and repairs, and it substantially reduces the risk of system-wide failures.

- Microservices. A microservices architecture splits the system into small, independent services. Each service can be scaled on its own according to its demand, which improves elasticity and resilience. This approach is key to IT infrastructure scalability because it makes resource allocation easier to adjust.

- Stateless design. Each request carries all the information needed to process it, and servers keep no session state between requests. As a result, any server can handle any request, which makes it easy to add servers.

- Load balancing. A load balancer distributes incoming traffic so that no server is overwhelmed. This reduces the risk of bottlenecks and improves overall system performance.

- Cloud computing. Cloud infrastructure allocates resources on demand, so companies don’t have to buy hardware in advance and can add resources only when they are actually needed.
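Stateless design and load balancing work together: if servers keep no per-user state, a load balancer can send each request to any of them. The minimal Python sketch below assumes a shared session store (an in-memory dict standing in for an external service such as Redis) and uses simple round-robin routing; the class and method names are hypothetical.

```python
import itertools


class SessionStore:
    """Shared session store. This in-memory dict is a stand-in (assumption)
    for an external service that every app server can reach."""

    def __init__(self) -> None:
        self._data: dict[str, list[str]] = {}

    def load_cart(self, session_id: str) -> list[str]:
        # Return a copy so servers never share mutable state directly.
        return list(self._data.get(session_id, []))

    def save_cart(self, session_id: str, cart: list[str]) -> None:
        self._data[session_id] = list(cart)


class AppServer:
    """Stateless app server: all per-user data lives in the shared store."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> tuple[str, list[str]]:
        cart = self.store.load_cart(session_id)
        cart.append(item)
        self.store.save_cart(session_id, cart)
        return self.name, cart


store = SessionStore()
servers = [AppServer("app-1", store), AppServer("app-2", store)]
round_robin = itertools.cycle(servers)  # simplest possible load balancer

results = []
for item in ["book", "lamp", "mug"]:
    server = next(round_robin)  # consecutive requests land on different servers
    results.append(server.add_to_cart("user-42", item))

for handled_by, cart in results:
    print(handled_by, cart)
```

Even though consecutive requests alternate between `app-1` and `app-2`, the cart is never lost, because neither server holds it in memory. Adding a third server only requires adding it to the rotation.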

Using these principles in the design stage allows organizations to build easily extensible systems and adapt to changes without getting left behind.

Software Scalability Strategies

When building a system with future expansion in mind, you need techniques that allow for growth without compromising performance. Key areas to consider include database management, load balancing, and cloud solutions. Each plays a crucial role in creating a platform that can handle increasing volumes.

Database Management

Proper database management is important because the database can become a scalability bottleneck when large volumes of data or many simultaneous users must be handled. The following measures can improve database performance:

- Horizontal scaling. Instead of relying on a single server or instance, share the database load across many servers. This approach is often essential for scalable software because it enables techniques like sharding, which splits a large dataset into smaller partitions (shards) stored on different servers, improving performance and efficiency.

- Replication. Replication creates multiple copies of your database to increase redundancy and ensure high availability. It can be synchronous, which keeps copies consistent at the cost of slower writes, or asynchronous, which is faster but lets replicas briefly lag behind. Choose based on how you need to balance data consistency against performance and fault tolerance as the system grows.

- Caching. Implement caching to store frequently accessed data in memory, reducing the need for repeated database queries and speeding up retrieval times.

- Regular monitoring. Track database metrics such as query response times and resource utilization. Many issues show up in these metrics early, so you can adjust settings in advance or contain problems before they spiral out of control.
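Sharding and caching are often combined on the read path. The Python sketch below, under simplifying assumptions, routes each key to a shard with a stable hash and puts a cache-aside layer in front: reads check the cache first and fall back to the owning shard, and writes invalidate the cached entry. The `Shard` and `CachedRepository` classes are hypothetical, with plain dicts standing in for database servers and for an in-memory cache such as Redis or Memcached.

```python
import hashlib
from typing import Optional


class Shard:
    """Hypothetical shard: a dict standing in for one database server."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.rows: dict[str, dict] = {}
        self.reads = 0  # count queries that actually reach this shard

    def get(self, key: str) -> Optional[dict]:
        self.reads += 1
        return self.rows.get(key)

    def put(self, key: str, value: dict) -> None:
        self.rows[key] = value


def shard_for(key: str, shards: list[Shard]) -> Shard:
    # Use a stable hash (Python's built-in hash() of str is randomized per
    # process) so every app server maps the same key to the same shard.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


class CachedRepository:
    """Cache-aside access to sharded storage."""

    def __init__(self, shards: list[Shard]) -> None:
        self.shards = shards
        self.cache: dict[str, dict] = {}  # stand-in for Redis/Memcached

    def get(self, key: str) -> Optional[dict]:
        if key in self.cache:  # cache hit: no database query
            return self.cache[key]
        value = shard_for(key, self.shards).get(key)  # cache miss: query shard
        if value is not None:
            self.cache[key] = value
        return value

    def put(self, key: str, value: dict) -> None:
        shard_for(key, self.shards).put(key, value)
        self.cache.pop(key, None)  # invalidate so the next read refetches


shards = [Shard(f"db-{i}") for i in range(3)]
repo = CachedRepository(shards)
for user_id in ("alice", "bob", "carol", "dave"):
    repo.put(user_id, {"id": user_id})

repo.get("alice")
repo.get("alice")  # served from the cache
print(sum(shard.reads for shard in shards))  # 1 query reached the database
print({shard.name: sorted(shard.rows) for shard in shards})
```

One caveat: with `hash % number_of_shards`, adding a shard remaps most keys to different shards, so production systems often use consistent hashing or a lookup directory to limit data movement.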

Load Balancing

Load balancing distributes incoming network traffic across multiple servers, preventing any one server from becoming overloaded. This ensures better system performance and reliability, especially during peak traffic times. Here are some important elements of load balancing:

- Dynamic load balancing. Dynamic algorithms such as Least Connections or Least Response Time route each request based on the servers' current load. This prevents any single server from being overloaded and makes better use of resources in scalable software.

- Session persistence. In applications such as online shops, where users need session continuity, session persistence (also called sticky sessions) routes all of a user's requests to the same server. This keeps the user experience continuous and consistent.

- Health checks. The load balancer periodically checks each server to detect failures and performance degradation. When a server goes down or becomes overloaded, the load balancer stops sending it traffic and reroutes requests to healthy servers.

- Scalable load balancers. Cloud-based and software load balancers make it easier to scale as demand increases. Many of them support auto-scaling, adjusting resources automatically based on the amount of traffic.
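The dynamic balancing and health-check ideas above can be sketched in a few lines of Python. This is a simplified, in-process illustration rather than the behavior of any specific load balancer product: it uses a weighted least-connections rule (the lowest number of active connections relative to a server's capacity weight wins) and skips servers that failed their last health check. The `Backend` fields and the `probe` callback, which stands in for something like an HTTP request to a health endpoint, are assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Backend:
    name: str
    weight: int = 1              # relative capacity: 2 = twice as powerful
    active_connections: int = 0
    healthy: bool = True


class LeastConnectionsBalancer:
    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends

    def pick(self) -> Backend:
        candidates = [b for b in self.backends if b.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        # Weighted least connections: lowest load relative to capacity wins;
        # ties go to the first backend in the list.
        chosen = min(candidates, key=lambda b: b.active_connections / b.weight)
        chosen.active_connections += 1
        return chosen

    def release(self, backend: Backend) -> None:
        # Call when a request finishes so the connection count stays accurate.
        backend.active_connections -= 1

    def run_health_checks(self, probe: Callable[[Backend], bool]) -> None:
        # Mark each backend healthy or unhealthy based on the probe result.
        for backend in self.backends:
            backend.healthy = probe(backend)


backends = [Backend("app-1"), Backend("app-2", weight=2), Backend("app-3")]
lb = LeastConnectionsBalancer(backends)

before = [lb.pick().name for _ in range(4)]
print(before)  # ['app-1', 'app-2', 'app-3', 'app-2']

# Simulate app-2 failing its health check: it stops receiving traffic.
lb.run_health_checks(lambda b: b.name != "app-2")
after = [lb.pick().name for _ in range(2)]
print(after)  # ['app-1', 'app-3']
```

Because `app-2` has weight 2, it receives a second request before the others do; once it fails its health check, traffic flows only to the remaining servers.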

Now let’s move on to cloud solutions.

Cloud Solutions

Cloud computing has changed how businesses approach scalability by providing resources that can be used flexibly, on demand. Key features of cloud solutions include:

- Elastic scalability. Cloud services let customers scale workloads up or down quickly and efficiently. This suits scalable software well: clients pay only for what they use while maintaining high performance during peak demand.

- Global reach. Cloud providers operate data centers in many regions, so applications can be deployed close to their users, reducing latency.

- Managed services. Cloud platforms simplify scaling by offering managed services such as databases and load balancers. Because the provider operates these services, they add little to the development team's workload.

- Cost efficiency. Cloud technology reduces spending on hardware and its maintenance. Because you pay only for what you use, costs stay under control as your needs change.
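Elastic scaling is usually driven by a simple control rule. The Python sketch below is modeled on the target-tracking formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), but it omits the real autoscaler's stabilization windows and other behaviors. The CPU metric, the min/max limits, and the 10% tolerance are illustrative assumptions, and `desired_replicas` is a hypothetical helper.

```python
import math


def desired_replicas(current_replicas: int,
                     current_cpu_pct: float,
                     target_cpu_pct: float,
                     min_replicas: int = 2,
                     max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Target-tracking scaling decision (simplified, hypothetical helper)."""
    ratio = current_cpu_pct / target_cpu_pct
    # Ignore small deviations from the target to avoid constant resizing.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go below the availability floor or above the budget ceiling.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))    # busy: scale out from 4 to 6
print(desired_replicas(8, 30, 60))    # quiet: scale in from 8 to 4
print(desired_replicas(5, 63, 60))    # within tolerance: stay at 5
print(desired_replicas(15, 100, 50))  # capped at max_replicas: 20
```

The min/max limits are where cost efficiency comes in: the floor keeps enough capacity for availability, and the ceiling caps spending during unexpected spikes.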

Together, good database management, load distribution, and cloud solutions optimize application performance, stability, and availability, all of which become more important as requirements grow.

Agile Practices for Software Scalability

Applying agile frameworks such as Scrum, Kanban, and Extreme Programming (XP) can significantly improve a team’s ability to build scalable software. This approach has several benefits, including the following:

- Cross-functional teams. Agile frameworks promote multi-skilled teams that can work together to address scalability issues from different perspectives.

- Iterative development. Agile work proceeds in short sprints, so the impact of each change on scalability can be evaluated at every stage of development rather than only at the end.

- Continuous integration and delivery (CI/CD). With CI/CD, teams automate testing and deployment, which speeds up feature releases while keeping scalability in focus with every update.

When agile itself needs to scale across a large organization, dedicated frameworks help. The Scaled Agile Framework (SAFe) aligns multiple teams toward shared goals. Large-Scale Scrum (LeSS) applies Scrum principles to bigger projects, while Disciplined Agile Delivery (DAD) offers a set of customizable practices that teams can tailor to their situation.

Testing for Software Scalability

Testing for scalability is an integral step in any software development lifecycle, as it ensures that applications can withstand increased load without degrading system performance or user experience. This phase involves testing a system with various scenarios to assess its effectiveness under growing demands related to users, transactions, or data.

The overall objective of scalability testing is to observe how the system behaves under increasing stress and to find bottlenecks. It determines the maximum load the application can handle while still performing acceptably, helps ensure users don't feel the effects of peak load periods, and supports cost-effective resource management by informing scaling and resource allocation strategies.

Key Metrics for Scalability Testing

Scalability testing metrics focus on how well a system handles increasing loads. Key parameters to monitor include:

- Response time is the duration the system takes to fulfill a request and return a response.

- Throughput is the number of requests or transactions the system completes per unit of time, such as requests per second.

- Resource utilization shows how the CPU, memory, disk, and network are used when the system is under pressure.

- Error rate is the percentage of requests or transactions that fail. Failures are among the most critical issues to fix before a system goes live.
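To make these metrics concrete, the Python sketch below computes them from the raw results of a hypothetical test run. It uses the nearest-rank method for latency percentiles (p50 and p95 are common choices because averages hide slow outliers) and counts only successful requests toward throughput; both choices are assumptions, and load testing tools may define these figures slightly differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    latency_ms: float  # response time of one request
    ok: bool           # False if the request failed


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: list[Sample], duration_s: float,
              cpu_readings_pct: list[float]) -> dict[str, float]:
    latencies = [s.latency_ms for s in samples]
    failures = sum(1 for s in samples if not s.ok)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": (len(samples) - failures) / duration_s,
        "error_rate_pct": 100 * failures / len(samples),
        "peak_cpu_pct": max(cpu_readings_pct),
    }


# Hypothetical results: ten requests over two seconds, the last one failed.
latencies = [120, 80, 95, 110, 300, 90, 85, 100, 105, 2000]
samples = [Sample(ms, ok=(i < 9)) for i, ms in enumerate(latencies)]
report = summarize(samples, duration_s=2.0, cpu_readings_pct=[45, 62, 78, 71])
print(report)
```

In this made-up run the median response time is 100 ms but p95 is 2,000 ms, and the error rate is 10%: exactly the kind of gap an average response time would blur.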

Various Ways to Conduct Software Scalability Testing

To do scalability testing right, you need a plan and you need to stick to it. Here are some ideas to help you out:

- Define clear objectives. Decide what each test is meant to prove and set its scope. For example, an objective could be the maximum number of concurrent users the site can support while keeping response times below a set threshold.

- Simulate realistic loads. Use tools that reproduce how real users behave and where they connect from. That means varying the types of users, their locations, their devices, and even network conditions.

- Use automated testing tools. Tools such as Apache JMeter or LoadRunner generate the required load and measure performance metrics efficiently. They can simulate thousands of virtual users accessing the application concurrently.

- Monitor performance metrics. Close performance tracking during scalability testing reveals the system's weak points and strengths. Application Performance Management (APM) tools are well suited to this step.

- Analyze results thoroughly. Analyze the data after every test to diagnose performance problems and resource issues, then use it to improve the design of your application and its supporting infrastructure.
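Dedicated tools like JMeter or LoadRunner are the right choice for real tests, but the core idea of a step load test fits in a short Python sketch: run increasing numbers of concurrent virtual users and record throughput, errors, and latency at each step. Here `call_service` is a hypothetical stand-in that sleeps for a few milliseconds; in practice it would send a request to the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_service(payload: int) -> int:
    """Hypothetical system under test: replace with a real request."""
    time.sleep(0.005)  # simulate about 5 ms of network and processing time
    return payload * 2


def virtual_user(user_id: int, requests_per_user: int) -> list[tuple[float, bool]]:
    """One simulated user sending requests back to back."""
    samples = []
    for i in range(requests_per_user):
        start = time.perf_counter()
        try:
            call_service(user_id * 1000 + i)
            ok = True
        except Exception:
            ok = False  # count any exception as a failed request
        samples.append(((time.perf_counter() - start) * 1000, ok))
    return samples


def run_step(users: int, requests_per_user: int) -> dict[str, float]:
    """Run one load level with `users` concurrent virtual users."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, u, requests_per_user)
                   for u in range(users)]
        samples = [s for f in futures for s in f.result()]
    elapsed = time.perf_counter() - start
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "users": users,
        "requests": len(samples),
        "throughput_rps": (len(samples) - failures) / elapsed,
        "error_rate_pct": 100 * failures / len(samples),
        "max_latency_ms": max(latency for latency, _ in samples),
    }


# Step the load up and look for the point where throughput stops growing
# while latency or errors climb: that marks the current scalability limit.
steps = [run_step(users, requests_per_user=10) for users in (1, 5, 10)]
for step in steps:
    print(step)
```

With this simulated service, throughput should rise roughly in line with the number of users because nothing is saturated; against a real system, the step where that stops happening shows where to focus optimization or scaling.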

Okay, the concept of scaling now looks clear on paper, but how do large companies tackle this challenge? Let's find out!

Case Studies of Scalability in Software

Here are a few case studies that show software scalability in practice and highlight the importance of combining creativity with good planning.

Sysco

Sysco, one of the largest broadline food distributors in the United States, faced serious challenges when moving its large sales staff onto digital platforms. To solve these problems, Sysco adopted more advanced approaches to database scalability and to scalability across its IT infrastructure. By introducing new architectural designs and optimizing its algorithms, Sysco took control of its extensive data requirements.

The key measures that Sysco undertook included:

- Database sharding. Sysco split a single database into several smaller, easier-to-manage databases, enabling horizontal scaling. This expanded the system's capacity and let it handle heavier loads.

- Replication strategies. By combining synchronous and asynchronous replication, Sysco achieved redundancy and high availability across its database systems. This was important for the business because it ensured there was no downtime, even during peak operational periods.

These strategic measures improved Sysco’s operational efficiency and prepared the company for future growth in a competitive business setting.

Facebook

Facebook’s growth into a key social media network illustrates what horizontal scaling can achieve. With billions of users interacting with the platform daily, Facebook adopted scalable software solutions and a robust architecture that distributes workloads seamlessly across thousands of servers. Facebook's major strategies included the following:

- Load distribution. Using load balancing techniques, Facebook directed user requests to different servers so that no single server was pushed beyond its capacity.

- Microservices architecture. Moving from a single monolithic application to a microservices architecture let Facebook scale individual services on demand. This flexibility proved valuable as the platform’s growth became harder to manage.

As a result of these tactics, Facebook has maintained high performance and reliability despite constantly rising user engagement.

Google

Google's approach to cloud scalability highlights the effectiveness of modern cloud solutions. Google Cloud is built for resilience, keeping operations running smoothly even during major outages or data center failures. The key components of Google’s strategy include:

- Global infrastructure. Google places its data centers strategically so that traffic load can be shared geographically. This improves performance by reducing latency and adds redundancy.

- Advanced auto-scaling. Google Cloud’s auto-scaling technologies automatically increase or decrease resources according to demand for the products.

- Easy access to better monitoring tools. Monitoring and analytics run continuously, keeping service status visible in real time so that teams can address performance issues as soon as they arise.

These practices enhance Google’s ability to handle enormous quantities of data and traffic with remarkable reliability and efficiency.

Conclusion

IT scalability is a major factor in whether software flourishes in today’s digital age. As user needs grow and evolve, a system’s ability to scale and handle higher loads without performance issues becomes a primary determinant of success. This article covered the key practices for achieving software scalability, including planning, architectural design, and testing.

To recap, successful software scalability involves:

- Capacity Management. Maintaining suitable performance when the system serves many users and processes large amounts of data.

- Data Management. Managing the bulk of information efficiently with minimal performance degradation.

- Code Structure. Architecting the program in a manner that will be flexible and easy to alter.

Planning for software scalability starts with assessing current capabilities, designing scalable architecture, and applying key strategies like database management, load balancing, and cloud solutions. If you bring these practices into your development process, you'll set your systems up for long-term growth and success.
